Core Operators

I’ve been reflecting on what it means to become a better prompt engineer. The field is expansive—prompt engineering for RAG applications? Better conversational interfaces? Building reliable agents? What does becoming a better prompt engineer even mean?

People talk about prompt engineering like a bag of tricks—the emphasis is on the special phrasing, templates, and intricate formatting. Staying current with new tips and techniques is essential, but these shortcuts are a bit like all those fancy symbols on a scientific calculator (√, xʸ, log, Σ). Most higher-order mathematical operations are derived from four core operators (+, −, ×, ÷). When it comes to prompt engineering, I want to know what the basic operators are.

Say you spoke to a prompt engineer tackling a complicated, real-world problem in a production-grade piece of software. How much of their work do you think is going to be based on clever prompting tricks versus clearly understanding what they’re trying to achieve and systematically breaking it down into manageable steps for the LLM?

In my experience so far, the bottleneck always comes back to how well you understand what you’re trying to do. Can you articulate how you do something, despite all its nuance and complexity, using plain language?

The litmus test is whether you can teach me (someone reasonably intelligent but unfamiliar with your specific domain) how to reliably perform the task you want done, at the standard you expect. If you can teach me, I can teach the machine.

I’m not talking about fancy prompting techniques here. I’m talking about breaking a complicated task down into a series of clear steps that result in the output you want.

The Bitter Lesson

If you're building anything that involves AI, then you have to know about the bitter lesson.

"The Bitter Lesson" is an essay by Rich Sutton, published on March 13, 2019. This sounds like ancient AI lore, but it is only six years old.

Sutton, a pioneer of reinforcement learning and a Turing Award winner, wrote the short essay about what 70 years of AI research has taught the field, and the best quote from it is also the first sentence.

"The biggest lesson that can be read from 70 years of AI research is that general methods that leverage computation are ultimately the most effective, and by a large margin."

In other words, as computation gets cheaper, you can throw more computing power at the problem, and it will outperform any of your optimisations.

It doesn't matter if you've found this crazy new prompting technique for improving your AI app.

Time spent optimising will ultimately be a waste. It's time that you could have spent elsewhere, waiting for the models to improve.

This is the bitter lesson.

Ultimately, we are all just wasting our time. Leveraging domain knowledge is pointless. Nothing really matters in AI research, apart from improving the power of the machines.

This is controversial, to say the least.

But if you see someone on the internet talking about the bitter lesson, now you know what they're talking about.

Every clever shortcut is tomorrow’s bottleneck

If AI models will eventually outperform every type of prompt optimisation or form of specialised domain knowledge, then how do we build systems that take this into account?

It's worth clarifying that if you actually read Sutton's incredibly short essay on the bitter lesson, he's not saying that leveraging domain knowledge is pointless.

He singles out "search" and "learning" as the two methods that seem to scale arbitrarily as more computation becomes available (not search in the sense of retrieving information, but rather as a way of exploring a subject or dataset).

Our job, as AI engineers, is to carefully define what we want the system to search for and ultimately learn from — and then suppress any urge to explain how it should do those things.

If AI systems get faster and better over time, any implementation details are a form of premature optimisation. They constrain AI to our understanding of a domain and limit its ability to operate independently.

Tell the system what to look for (search) and what doing that well means (learning), and then get out of the way.

The goal is to be able to swap out all of your models and retrieval techniques. Everything (other than the system's core task definitions) should be flexible because those elements will improve faster than your domain understanding.

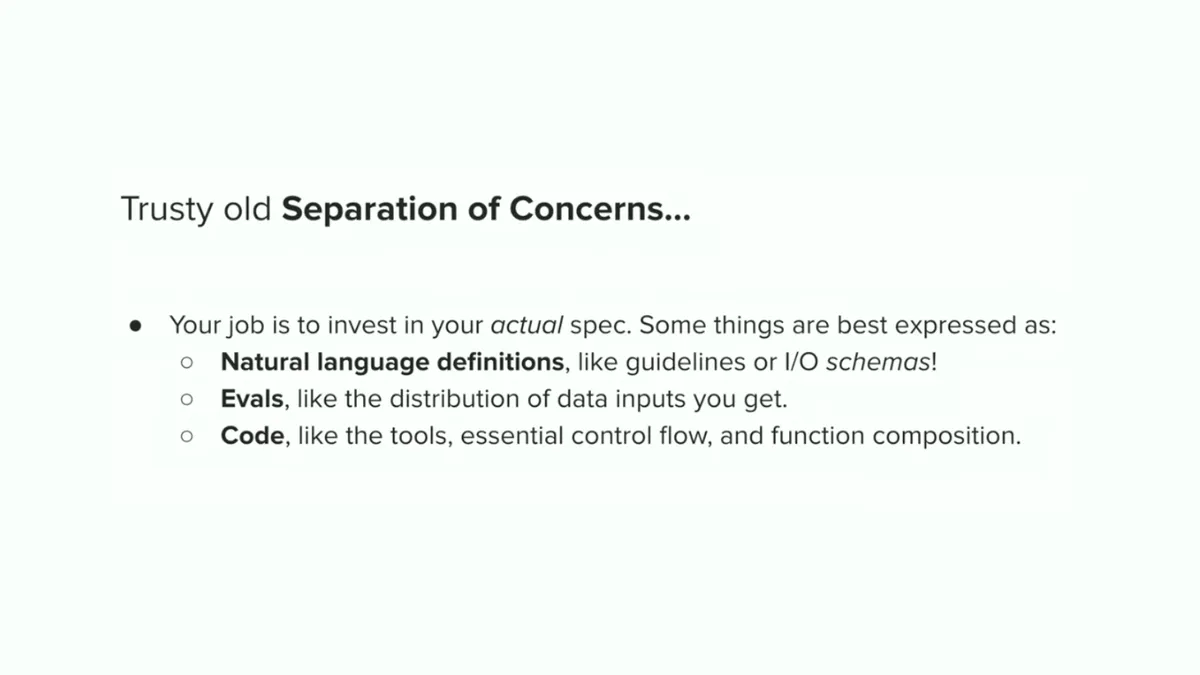
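One way to picture that swappability, as a minimal Python sketch rather than a prescribed design: the task definition stays fixed while the model and the retriever sit behind small interfaces. Every name here (`TaskDefinition`, `LanguageModel`, `Retriever`, `KeywordRetriever`, `LastLineModel`, `LineCountModel`, `answer`) is hypothetical and invented for illustration, and the two stub models only stand in for real LLM clients.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Protocol


class LanguageModel(Protocol):
    """Anything that turns a prompt into text (hypothetical interface)."""

    def complete(self, prompt: str) -> str: ...


class Retriever(Protocol):
    """Anything that returns the k most relevant passages (hypothetical interface)."""

    def search(self, query: str, k: int) -> list[str]: ...


@dataclass(frozen=True)
class TaskDefinition:
    """The stable part: what the system does, stated in plain language."""

    instruction: str


class KeywordRetriever:
    """Toy retriever that ranks documents by words shared with the query."""

    def __init__(self, documents: list[str]) -> None:
        self.documents = documents

    def search(self, query: str, k: int) -> list[str]:
        words = set(query.lower().split())
        ranked = sorted(
            self.documents,
            key=lambda doc: len(words & set(doc.lower().split())),
            reverse=True,
        )
        return ranked[:k]


class LastLineModel:
    """Stub model: echoes the last line of the prompt."""

    def complete(self, prompt: str) -> str:
        return prompt.splitlines()[-1]


class LineCountModel:
    """A second stub model, to show the swap: reports the prompt's line count."""

    def complete(self, prompt: str) -> str:
        return f"{len(prompt.splitlines())} lines"


def answer(
    task: TaskDefinition,
    question: str,
    model: LanguageModel,
    retriever: Retriever,
    k: int = 2,
) -> str:
    """Build a prompt from the fixed task plus whatever parts are plugged in."""
    passages = retriever.search(question, k)
    prompt = "\n".join(
        [task.instruction, "Context:", *passages, f"Question: {question}"]
    )
    return model.complete(prompt)
```

Calling `answer(task, question, LastLineModel(), retriever)` and then `answer(task, question, LineCountModel(), retriever)` changes only the model argument; the `TaskDefinition` is untouched, which is the property the paragraph above is after.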

According to Omar Khattab, the guy who invented DSPy, your core task definitions (at the application layer) include:

- Your natural language definitions of what your system handles: the most essential objects and methods in your prompts, the stuff you couldn't say in any other way. Strip out all forms of model appeasement, chaff like "I will tip you $1000 if you give me the best answer."

- Your evaluations: your definition of the outcome(s) you actually care about.

- Your code: tools, any essential control flow, or function composition. If your system can charge someone's bank account, you don't want the model interpreting what "a bank charge" means.

These are the core operators.

They are the fundamentals, the evergreen components that define your AI system. This is the answer to how we work with AI systems that will eventually outperform any type of prompt optimisation or specialised domain knowledge.
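To make the three pieces concrete, here is a rough Python sketch under stated assumptions; it is not DSPy's API and not Khattab's implementation. The refund-classification task, `REFUND_TASK`, `Example`, `accuracy`, `keyword_predictor`, and `charge_account` are all hypothetical names chosen for illustration, and `keyword_predictor` is a stand-in for a real model call.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

# 1. Natural language definition: what the system handles, with no model appeasement.
REFUND_TASK = (
    "Decide whether the customer message is asking for a refund. "
    "Answer 'yes' or 'no'."
)


# 2. Evaluation: the outcome we care about, independent of any model or prompt trick.
@dataclass(frozen=True)
class Example:
    message: str
    expected: str  # "yes" or "no"


def accuracy(predict: Callable[[str, str], str], examples: list[Example]) -> float:
    """Fraction of examples where predict(task, message) matches the label."""
    if not examples:
        raise ValueError("need at least one example")
    correct = sum(
        predict(REFUND_TASK, ex.message).strip().lower() == ex.expected
        for ex in examples
    )
    return correct / len(examples)


# 3. Code: a tool with exact semantics, so nothing about a charge is left to interpretation.
def charge_account(account_id: str, amount_cents: int) -> dict:
    """Validate and describe a charge; amounts are whole cents, strictly positive."""
    if isinstance(amount_cents, bool) or not isinstance(amount_cents, int):
        raise ValueError("amount_cents must be an integer number of cents")
    if amount_cents <= 0:
        raise ValueError("amount_cents must be positive")
    return {"account_id": account_id, "amount_cents": amount_cents, "status": "pending"}


def keyword_predictor(task: str, message: str) -> str:
    """Stub predictor standing in for an LLM call; ignores the task text."""
    return "yes" if "refund" in message.lower() else "no"
```

Any predictor with the same `(task, message) -> str` shape can be scored with `accuracy`, so a stronger model or a different prompting strategy can be dropped in and compared against the same definition of success.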

By keeping things as decoupled as possible and maintaining a clear separation of concerns, you set your AI engineering efforts up for future success. This allows you to maximise AI's intelligence rather than limiting it with a premature understanding of your own domain.

Stuff Mentioned...

- Omar Khattab - https://omarkhattab.com

- DSPy - https://dspy.ai

- Sutton's original 'Bitter Lesson' essay - https://www.cs.utexas.edu/~eunsol/courses/data/bitter_lesson.pdf

- Omar at AIE, explaining how to engineer AI systems that endure the Bitter Lesson - https://youtu.be/qdmxApz3EJI?si=ukKot8lII2HRn57e